Introduction to Data Structures and Algorithms

Data Structure is a way of collecting and organising data in such a way that we can perform orations on these data in an effective way. Data Structures is about rendering data elements in terms of some relationship, for better organization and storage. For example, we have some data which has, player's name "Virat" and age 26. Here "Virat" is of String data type and 26 is of integer data type.

We can organize this data as a record like Player record, which will have both player's name and age in it. Now we can collect and store player's records in a file or database as a data structure. For example: "Dhoni" 30, "Gambhir" 31, "Sehwag" 33

If you are aware of Object Oriented programming concepts, then a class also does the same thing, it collects different type of data under one single entity. The only difference being, data structures provides for techniques to access and manipulate data efficiently.

In simple language, Data Structures are structures programmed to store ordered data, so that various operations can be performed on it easily. It represents the knowledge of data to be organized in memory. It should be designed and implemented in such a way that it reduces the complexity and increases the efficiency.

Basic types of Data Structures

As we have discussed above, anything that can store data can be called as a data structure, hence Integer, Float, Boolean, Char etc, all are data structures. They are known as Primitive Data Structures.

Then we also have some complex Data Structures, which are used to store large and connected data. Some example of Abstract Data Structure are :

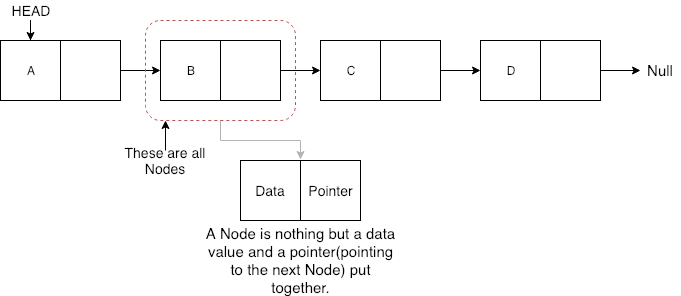

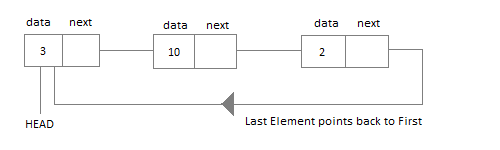

- Linked List

- Tree

- Graph

- Stack, Queue etc.

All these data structures allow us to perform different operations on data. We select these data structures based on which type of operation is required. We will look into these data structures in more details in our later lessons.

The data structures can also be classified on the basis of the following characteristics:

| Characterstic | Description |

|---|---|

| Linear | In Linear data structures,the data items are arranged in a linear sequence. Example: Array |

| Non-Linear | In Non-Linear data structures,the data items are not in sequence. Example: Tree, Graph |

| Homogeneous | In homogeneous data structures,all the elements are of same type. Example: Array |

| Non-Homogeneous | In Non-Homogeneous data structure, the elements may or may not be of the same type. Example: Structures |

| Static | Static data structures are those whose sizes and structures associated memory locations are fixed, at compile time. Example: Array |

| Dynamic | Dynamic structures are those which expands or shrinks depending upon the program need and its execution. Also, their associated memory locations changes. Example: Linked List created using pointers |

What is an Algorithm ?

An algorithm is a finite set of instructions or logic, written in order, to accomplish a certain predefined task. Algorithm is not the complete code or program, it is just the core logic(solution) of a problem, which can be expressed either as an informal high level description as pseudocode or using a flowchart.

Every Algorithm must satisfy the following properties:

- Input- There should be 0 or more inputs supplied externally to the algorithm.

- Output- There should be atleast 1 output obtained.

- Definiteness- Every step of the algorithm should be clear and well defined.

- Finiteness- The algorithm should have finite number of steps.

- Correctness- Every step of the algorithm must generate a correct output.

An algorithm is said to be efficient and fast, if it takes less time to execute and consumes less memory space. The performance of an algorithm is measured on the basis of following properties :

- Time Complexity

- Space Complexity

Space Complexity

Its the amount of memory space required by the algorithm, during the course of its execution. Space complexity must be taken seriously for multi-user systems and in situations where limited memory is available.

An algorithm generally requires space for following components :

- Instruction Space: Its the space required to store the executable version of the program. This space is fixed, but varies depending upon the number of lines of code in the program.

- Data Space: Its the space required to store all the constants and variables(including temporary variables) value.

- Environment Space: Its the space required to store the environment information needed to resume the suspended function.

To learn about Space Complexity in detail, jump to the Space Complexity tutorial.

Time Complexity

Time Complexity is a way to represent the amount of time required by the program to run till its completion. It's generally a good practice to try to keep the time required minimum, so that our algorithm completes it's execution in the minimum time possible. We will study about Time Complexity in details in later sections.

NOTE: Before going deep into data structure, you should have a good knowledge of programming either in C or in C++ or Java or Python etc.

Asymptotic Notations

When it comes to analysing the complexity of any algorithm in terms of time and space, we can never provide an exact number to define the time required and the space required by the algorithm, instead we express it using some standard notations, also known as Asymptotic Notations.

When we analyse any algorithm, we generally get a formula to represent the amount of time required for execution or the time required by the computer to run the lines of code of the algorithm, number of memory accesses, number of comparisons, temporary variables occupying memory space etc. This formula often contains unimportant details that don't really tell us anything about the running time.

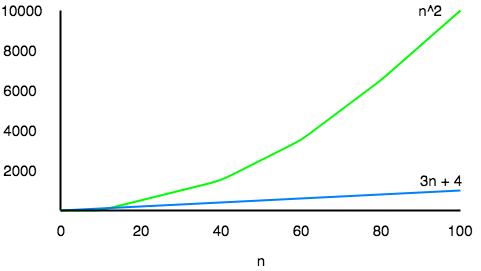

Let us take an example, if some algorithm has a time complexity of T(n) = (n2 + 3n + 4), which is a quadratic equation. For large values of n, the 3n + 4 part will become insignificant compared to the n2 part.

For n = 1000, n2 will be 1000000 while 3n + 4 will be 3004.

Also, When we compare the execution times of two algorithms the constant coefficients of higher order terms are also neglected.

An algorithm that takes a time of 200n2 will be faster than some other algorithm that takes n3 time, for any value of n larger than 200. Since we're only interested in the asymptotic behavior of the growth of the function, the constant factor can be ignored too.

What is Asymptotic Behaviour

The word Asymptotic means approaching a value or curve arbitrarily closely (i.e., as some sort of limit is taken).

Remember studying about Limits in High School, this is the same.

The only difference being, here we do not have to find the value of any expression where n is approaching any finite number or infinity, but in case of Asymptotic notations, we use the same model to ignore the constant factors and insignificant parts of an expression, to device a better way of representing complexities of algorithms, in a single coefficient, so that comparison between algorithms can be done easily.

Let's take an example to understand this:

If we have two algorithms with the following expressions representing the time required by them for execution, then:

Expression 1: (20n2 + 3n - 4)

Expression 2: (n3 + 100n - 2)

Now, as per asymptotic notations, we should just worry about how the function will grow as the value of n(input) will grow, and that will entirely depend on n2 for the Expression 1, and on n3 for Expression 2. Hence, we can clearly say that the algorithm for which running time is represented by the Expression 2, will grow faster than the other one, simply by analysing the highest power coeeficient and ignoring the other constants(20 in 20n2) and insignificant parts of the expression(3n - 4 and 100n - 2).

The main idea behind casting aside the less important part is to make things manageable.

All we need to do is, first analyse the algorithm to find out an expression to define it's time requirements and then analyse how that expression will grow as the input(n) will grow.

Types of Asymptotic Notations

We use three types of asymptotic notations to represent the growth of any algorithm, as input increases:

- Big Theta (Θ)

- Big Oh(O)

- Big Omega (Ω)

Tight Bounds: Theta

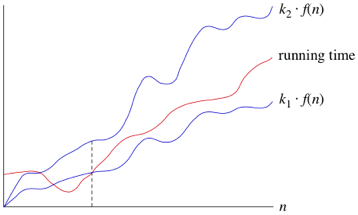

When we say tight bounds, we mean that the time compexity represented by the Big-Θ notation is like the average value or range within which the actual time of execution of the algorithm will be.

For example, if for some algorithm the time complexity is represented by the expression 3n2 + 5n, and we use the Big-Θ notation to represent this, then the time complexity would be Θ(n2), ignoring the constant coefficient and removing the insignificant part, which is 5n.

Here, in the example above, complexity of Θ(n2) means, that the avaerage time for any input n will remain in between, k1 * n2 and k2 * n2, where k1, k2 are two constants, therby tightly binding the expression rpresenting the growth of the algorithm.

Upper Bounds: Big-O

This notation is known as the upper bound of the algorithm, or a Worst Case of an algorithm.

It tells us that a certain function will never exceed a specified time for any value of input n.

The question is why we need this representation when we already have the big-Θ notation, which represents the tightly bound running time for any algorithm. Let's take a small example to understand this.

Consider Linear Search algorithm, in which we traverse an array elements, one by one to search a given number.

In Worst case, starting from the front of the array, we find the element or number we are searching for at the end, which will lead to a time complexity of n, where n represents the number of total elements.

But it can happen, that the element that we are searching for is the first element of the array, in which case the time complexity will be 1.

Now in this case, saying that the big-Θ or tight bound time complexity for Linear search is Θ(n), will mean that the time required will always be related to n, as this is the right way to represent the average time complexity, but when we use the big-O notation, we mean to say that the time complexity is O(n), which means that the time complexity will never exceed n, defining the upper bound, hence saying that it can be less than or equal to n, which is the correct representation.

This is the reason, most of the time you will see Big-O notation being used to represent the time complexity of any algorithm, because it makes more sense.

Lower Bounds: Omega

Big Omega notation is used to define the lower bound of any algorithm or we can say the best case of any algorithm.

This always indicates the minimum time required for any algorithm for all input values, therefore the best case of any algorithm.

In simple words, when we represent a time complexity for any algorithm in the form of big-Ω, we mean that the algorithm will take atleast this much time to cmplete it's execution. It can definitely take more time than this too.

Space Complexity of Algorithms

Whenever a solution to a problem is written some memory is required to complete. For any algorithm memory may be used for the following:

- Variables (This include the constant values, temporary values)

- Program Instruction

- Execution

Space complexity is the amount of memory used by the algorithm (including the input values to the algorithm) to execute and produce the result.

Sometime Auxiliary Space is confused with Space Complexity. But Auxiliary Space is the extra space or the temporary space used by the algorithm during it's execution.

Space Complexity = Auxiliary Space + Input space

Memory Usage while Execution

While executing, algorithm uses memory space for three reasons:

- Instruction Space

It's the amount of memory used to save the compiled version of instructions.

- Environmental Stack

Sometimes an algorithm(function) may be called inside another algorithm(function). In such a situation, the current variables are pushed onto the system stack, where they wait for further execution and then the call to the inside algorithm(function) is made.

For example, If a function

A()calls functionB()inside it, then all th variables of the functionA()will get stored on the system stack temporarily, while the functionB()is called and executed inside the funcitonA(). - Data Space

Amount of space used by the variables and constants.

But while calculating the Space Complexity of any algorithm, we usually consider only Data Space and we neglect the Instruction Space and Environmental Stack.

Calculating the Space Complexity

For calculating the space complexity, we need to know the value of memory used by different type of datatype variables, which generally varies for different operating systems, but the method for calculating the space complexity remains the same.

| Type | Size |

|---|---|

| bool, char, unsigned char, signed char, __int8 | 1 byte |

| __int16, short, unsigned short, wchar_t, __wchar_t | 2 bytes |

| float, __int32, int, unsigned int, long, unsigned long | 4 bytes |

| double, __int64, long double, long long | 8 bytes |

Now let's learn how to compute space complexity by taking a few examples:

{

int z = a + b + c;

return(z);

}In the above expression, variables a, b, c and z are all integer types, hence they will take up 4 bytes each, so total memory requirement will be (4(4) + 4) = 20 bytes, this additional 4 bytes is for return value. And because this space requirement is fixed for the above example, hence it is called Constant Space Complexity.

Let's have another example, this time a bit complex one,

// n is the length of array a[]

int sum(int a[], int n)

{

int x = 0; // 4 bytes for x

for(int i = 0; i < n; i++) // 4 bytes for i

{

x = x + a[i];

}

return(x);

}- In the above code,

4*nbytes of space is required for the arraya[]elements. - 4 bytes each for

x,n,iand the return value.

Hence the total memory requirement will be (4n + 12), which is increasing linearly with the increase in the input value n, hence it is called as Linear Space Complexity.

Similarly, we can have quadratic and other complex space complexity as well, as the complexity of an algorithm increases.

But we should always focus on writing algorithm code in such a way that we keep the space complexity minimum.

Time Complexity of Algorithms

For any defined problem, there can be N number of solution. This is true in general. If I have a problem and I discuss about the problem with all of my friends, they will all suggest me different solutions. And I am the one who has to decide which solution is the best based on the circumstances.

Similarly for any problem which must be solved using a program, there can be infinite number of solutions. Let's take a simple example to understand this. Below we have two different algorithms to find square of a number(for some time, forget that square of any number n is n*n):

One solution to this problem can be, running a loop for n times, starting with the number n and adding n to it, every time.

/*

we have to calculate the square of n

*/

for i=1 to n

do n = n + n

// when the loop ends n will hold its square

return nOr, we can simply use a mathematical operator * to find the square.

/*

we have to calculate the square of n

*/

return n*nIn the above two simple algorithms, you saw how a single problem can have many solutions. While the first solution required a loop which will execute for n number of times, the second solution used a mathematical operator * to return the result in one line. So which one is the better approach, of course the second one.

What is Time Complexity?

Time complexity of an algorithm signifies the total time required by the program to run till its completion.

The time complexity of algorithms is most commonly expressed using the big O notation. It's an asymptotic notation to represent the time complexity. We will study about it in detail in the next tutorial.

Time Complexity is most commonly estimated by counting the number of elementary steps performed by any algorithm to finish execution. Like in the example above, for the first code the loop will run n number of times, so the time complexity will be n atleast and as the value of n will increase the time taken will also increase. While for the second code, time complexity is constant, because it will never be dependent on the value of n, it will always give the result in 1 step.

And since the algorithm's performance may vary with different types of input data, hence for an algorithm we usually use the worst-case Time complexity of an algorithm because that is the maximum time taken for any input size.

Calculating Time Complexity

Now lets tap onto the next big topic related to Time complexity, which is How to Calculate Time Complexity. It becomes very confusing some times, but we will try to explain it in the simplest way.

Now the most common metric for calculating time complexity is Big O notation. This removes all constant factors so that the running time can be estimated in relation to N, as N approaches infinity. In general you can think of it like this :

statement;

Above we have a single statement. Its Time Complexity will be Constant. The running time of the statement will not change in relation to N.

for(i=0; i < N; i++)

{

statement;

}The time complexity for the above algorithm will be Linear. The running time of the loop is directly proportional to N. When N doubles, so does the running time.

for(i=0; i < N; i++)

{

for(j=0; j < N;j++)

{

statement;

}

}This time, the time complexity for the above code will be Quadratic. The running time of the two loops is proportional to the square of N. When N doubles, the running time increases by N * N.

while(low <= high)

{

mid = (low + high) / 2;

if (target < list[mid])

high = mid - 1;

else if (target > list[mid])

low = mid + 1;

else break;

}This is an algorithm to break a set of numbers into halves, to search a particular field(we will study this in detail later). Now, this algorithm will have a Logarithmic Time Complexity. The running time of the algorithm is proportional to the number of times N can be divided by 2(N is high-low here). This is because the algorithm divides the working area in half with each iteration.

void quicksort(int list[], int left, int right)

{

int pivot = partition(list, left, right);

quicksort(list, left, pivot - 1);

quicksort(list, pivot + 1, right);

}Taking the previous algorithm forward, above we have a small logic of Quick Sort(we will study this in detail later). Now in Quick Sort, we divide the list into halves every time, but we repeat the iteration N times(where N is the size of list). Hence time complexity will be N*log( N ). The running time consists of N loops (iterative or recursive) that are logarithmic, thus the algorithm is a combination of linear and logarithmic.

NOTE: In general, doing something with every item in one dimension is linear, doing something with every item in two dimensions is quadratic, and dividing the working area in half is logarithmic.

Types of Notations for Time Complexity

Now we will discuss and understand the various notations used for Time Complexity.- Big Oh denotes "fewer than or the same as" <expression> iterations.

- Big Omega denotes "more than or the same as" <expression> iterations.

- Big Theta denotes "the same as" <expression> iterations.

- Little Oh denotes "fewer than" <expression> iterations.

- Little Omega denotes "more than" <expression> iterations.

Understanding Notations of Time Complexity with Example

O(expression) is the set of functions that grow slower than or at the same rate as expression. It indicates the maximum required by an algorithm for all input values. It represents the worst case of an algorithm's time complexity.

Omega(expression) is the set of functions that grow faster than or at the same rate as expression. It indicates the minimum time required by an algorithm for all input values. It represents the best case of an algorithm's time complexity.

Theta(expression) consist of all the functions that lie in both O(expression) and Omega(expression). It indicates the average bound of an algorithm. It represents the average case of an algorithm's time complexity.

Suppose you've calculated that an algorithm takes f(n) operations, where,

f(n) = 3*n^2 + 2*n + 4. // n^2 means square of nSince this polynomial grows at the same rate as n2, then you could say that the function f lies in the set Theta(n2). (It also lies in the sets O(n2) and Omega(n2) for the same reason.)

The simplest explanation is, because Theta denotes the same as the expression. Hence, as f(n) grows by a factor of n2, the time complexity can be best represented as Theta(n2).

Introduction to Sorting

Sorting is nothing but arranging the data in ascending or descending order. The term sorting came into picture, as humans realised the importance of searching quickly.

There are so many things in our real life that we need to search for, like a particular record in database, roll numbers in merit list, a particular telephone number in telephone directory, a particular page in a book etc. All this would have been a mess if the data was kept unordered and unsorted, but fortunately the concept of sorting came into existence, making it easier for everyone to arrange data in an order, hence making it easier to search.

Sorting arranges data in a sequence which makes searching easier.

Sorting Efficiency

If you ask me, how will I arrange a deck of shuffled cards in order, I would say, I will start by checking every card, and making the deck as I move on.

It can take me hours to arrange the deck in order, but that's how I will do it.

Well, thank god, computers don't work like this.

Since the beginning of the programming age, computer scientists have been working on solving the problem of sorting by coming up with various different algorithms to sort data.

The two main criterias to judge which algorithm is better than the other have been:

- Time taken to sort the given data.

- Memory Space required to do so.

Different Sorting Algorithms

There are many different techniques available for sorting, differentiated by their efficiency and space requirements. Following are some sorting techniques which we will be covering in next few tutorials.

- Bubble Sort

- Insertion Sort

- Selection Sort

- Quick Sort

- Merge Sort

- Heap Sort

Although it's easier to understand these sorting techniques, but still we suggest you to first learn about Space complexity, Time complexity and the searching algorithms, to warm up your brain for sorting algorithms.

Bubble Sort Algorithm

Bubble Sort is a simple algorithm which is used to sort a given set of n elements provided in form of an array with n number of elements. Bubble Sort compares all the element one by one and sort them based on their values.

If the given array has to be sorted in ascending order, then bubble sort will start by comparing the first element of the array with the second element, if the first element is greater than the second element, it will swap both the elements, and then move on to compare the second and the third element, and so on.

If we have total n elements, then we need to repeat this process for n-1 times.

It is known as bubble sort, because with every complete iteration the largest element in the given array, bubbles up towards the last place or the highest index, just like a water bubble rises up to the water surface.

Sorting takes place by stepping through all the elements one-by-one and comparing it with the adjacent element and swapping them if required.

Implementing Bubble Sort Algorithm

Following are the steps involved in bubble sort(for sorting a given array in ascending order):

- Starting with the first element(index = 0), compare the current element with the next element of the array.

- If the current element is greater than the next element of the array, swap them.

- If the current element is less than the next element, move to the next element. Repeat Step 1.

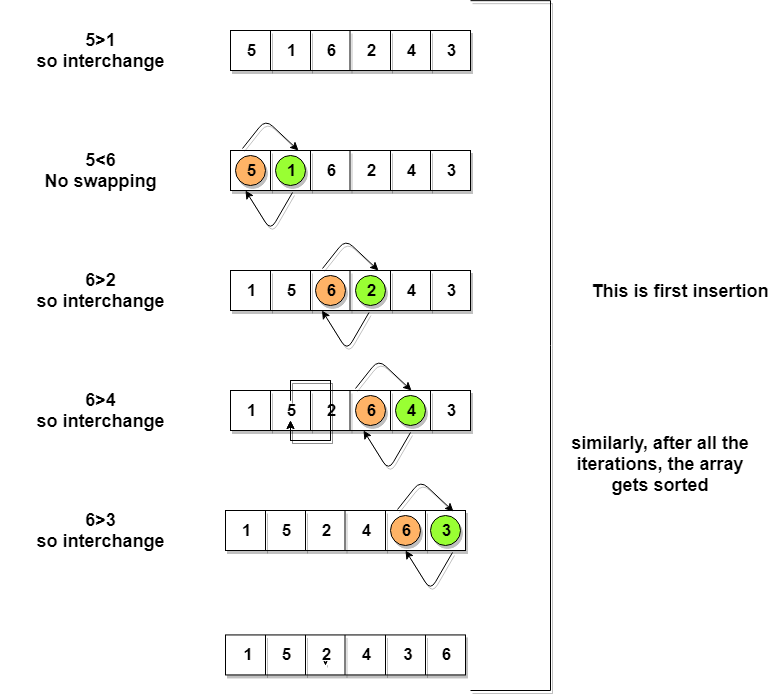

Let's consider an array with values {5, 1, 6, 2, 4, 3}

Below, we have a pictorial representation of how bubble sort will sort the given array.

So as we can see in the representation above, after the first iteration, 6 is placed at the last index, which is the correct position for it.

Similarly after the second iteration, 5 will be at the second last index, and so on.

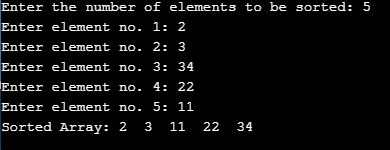

Time to write the code for bubble sort:

// below we have a simple C program for bubble sort

#include <stdio.h>

void bubbleSort(int arr[], int n)

{

int i, j, temp;

for(i = 0; i < n; i++)

{

for(j = 0; j < n-i-1; j++)

{

if( arr[j] > arr[j+1])

{

// swap the elements

temp = arr[j];

arr[j] = arr[j+1];

arr[j+1] = temp;

}

}

}

// print the sorted array

printf("Sorted Array: ");

for(i = 0; i < n; i++)

{

printf("%d ", arr[i]);

}

}

int main()

{

int arr[100], i, n, step, temp;

// ask user for number of elements to be sorted

printf("Enter the number of elements to be sorted: ");

scanf("%d", &n);

// input elements if the array

for(i = 0; i < n; i++)

{

printf("Enter element no. %d: ", i+1);

scanf("%d", &arr[i]);

}

// call the function bubbleSort

bubbleSort(arr, n);

return 0;

}Although the above logic will sort an unsorted array, still the above algorithm is not efficient because as per the above logic, the outer for loop will keep on executing for 6 iterations even if the array gets sorted after the second iteration.

So, we can clearly optimize our algorithm.

Optimized Bubble Sort Algorithm

To optimize our bubble sort algorithm, we can introduce a flag to monitor whether elements are getting swapped inside the inner for loop.

Hence, in the inner for loop, we check whether swapping of elements is taking place or not, everytime.

If for a particular iteration, no swapping took place, it means the array has been sorted and we can jump out of the for loop, instead of executing all the iterations.

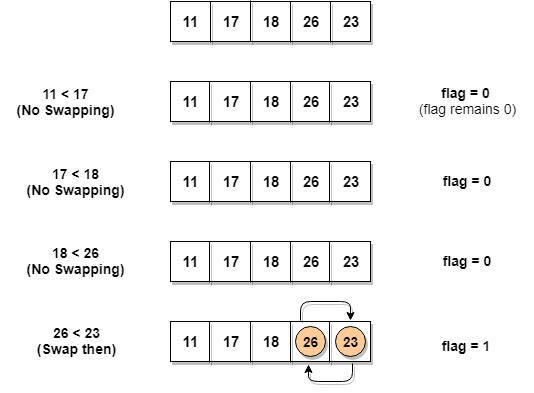

Let's consider an array with values {11, 17, 18, 26, 23}

Below, we have a pictorial representation of how the optimized bubble sort will sort the given array.

As we can see, in the first iteration, swapping took place, hence we updated our flag value to 1, as a result, the execution enters the for loop again. But in the second iteration, no swapping will occur, hence the value of flag will remain 0, and execution will break out of loop.

// below we have a simple C program for bubble sort

#include <stdio.h>

void bubbleSort(int arr[], int n)

{

int i, j, temp, flag=0;

for(i = 0; i < n; i++)

{

for(j = 0; j < n-i-1; j++)

{

// introducing a flag to monitor swapping

if( arr[j] > arr[j+1])

{

// swap the elements

temp = arr[j];

arr[j] = arr[j+1];

arr[j+1] = temp;

// if swapping happens update flag to 1

flag = 1;

}

}

// if value of flag is zero after all the iterations of inner loop

// then break out

if(flag==0)

{

break;

}

}

// print the sorted array

printf("Sorted Array: ");

for(i = 0; i < n; i++)

{

printf("%d ", arr[i]);

}

}

int main()

{

int arr[100], i, n, step, temp;

// ask user for number of elements to be sorted

printf("Enter the number of elements to be sorted: ");

scanf("%d", &n);

// input elements if the array

for(i = 0; i < n; i++)

{

printf("Enter element no. %d: ", i+1);

scanf("%d", &arr[i]);

}

// call the function bubbleSort

bubbleSort(arr, n);

return 0;

}

In the above code, in the function bubbleSort, if for a single complete cycle of j iteration(inner for loop), no swapping takes place, then flag will remain 0 and then we will break out of the for loops, because the array has already been sorted.

Complexity Analysis of Bubble Sort

In Bubble Sort, n-1 comparisons will be done in the 1st pass, n-2 in 2nd pass, n-3 in 3rd pass and so on. So the total number of comparisons will be,

(n-1) + (n-2) + (n-3) + ..... + 3 + 2 + 1 Sum = n(n-1)/2 i.e O(n2)

Hence the time complexity of Bubble Sort is O(n2).

The main advantage of Bubble Sort is the simplicity of the algorithm.

The space complexity for Bubble Sort is O(1), because only a single additional memory space is required i.e. for temp variable.

Also, the best case time complexity will be O(n), it is when the list is already sorted.

Following are the Time and Space complexity for the Bubble Sort algorithm.

- Worst Case Time Complexity [ Big-O ]: O(n2)

- Best Case Time Complexity [Big-omega]: O(n)

- Average Time Complexity [Big-theta]: O(n2)

- Space Complexity: O(1)

Selection Sort Algorithm

Selection sort is conceptually the most simplest sorting algorithm. This algorithm will first find the smallest element in the array and swap it with the element in the first position, then it will find the second smallest element and swap it with the element in the second position, and it will keep on doing this until the entire array is sorted.

It is called selection sort because it repeatedly selects the next-smallest element and swaps it into the right place.

How Selection Sort Works?

Following are the steps involved in selection sort(for sorting a given array in ascending order):

- Starting from the first element, we search the smallest element in the array, and replace it with the element in the first position.

- We then move on to the second position, and look for smallest element present in the subarray, starting from index

1, till the last index. - We replace the element at the second position in the original array, or we can say at the first position in the subarray, with the second smallest element.

- This is repeated, until the array is completely sorted.

Let's consider an array with values

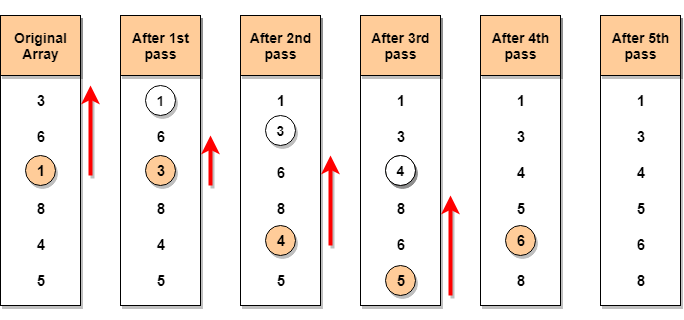

{3, 6, 1, 8, 4, 5}Below, we have a pictorial representation of how selection sort will sort the given array.

In the first pass, the smallest element will be

1, so it will be placed at the first position.Then leaving the first element, next smallest element will be searched, from the remaining elements. We will get

3as the smallest, so it will be then placed at the second position.Then leaving

1and3(because they are at the correct position), we will search for the next smallest element from the rest of the elements and put it at third position and keep doing this until array is sorted.Finding Smallest Element in a subarray

In selection sort, in the first step, we look for the smallest element in the array and replace it with the element at the first position. This seems doable, isn't it?

Consider that you have an array with following values

{3, 6, 1, 8, 4, 5}. Now as per selection sort, we will start from the first element and look for the smallest number in the array, which is1and we will find it at the index2. Once the smallest number is found, it is swapped with the element at the first position.Well, in the next iteration, we will have to look for the second smallest number in the array. How can we find the second smallest number? This one is tricky?

If you look closely, we already have the smallest number/element at the first position, which is the right position for it and we do not have to move it anywhere now. So we can say, that the first element is sorted, but the elements to the right, starting from index

1are not.So, we will now look for the smallest element in the subarray, starting from index

1, to the last index.Confused? Give it time to sink in.

After we have found the second smallest element and replaced it with element on index

1(which is the second position in the array), we will have the first two positions of the array sorted.Then we will work on the subarray, starting from index

2now, and again looking for the smallest element in this subarray.Implementing Selection Sort Algorithm

In the C program below, we have tried to divide the program into small functions, so that it's easier fo you to understand which part is doing what.

There are many different ways to implement selection sort algorithm, here is the one that we like:

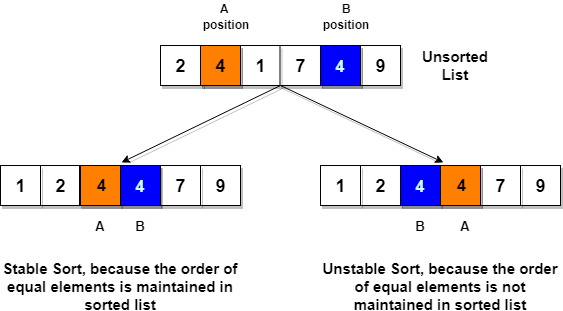

// C program implementing Selection Sort # include <stdio.h> // function to swap elements at the given index values void swap(int arr[], int firstIndex, int secondIndex) { int temp; temp = arr[firstIndex]; arr[firstIndex] = arr[secondIndex]; arr[secondIndex] = temp; } // function to look for smallest element in the given subarray int indexOfMinimum(int arr[], int startIndex, int n) { int minValue = arr[startIndex]; int minIndex = startIndex; for(int i = minIndex + 1; i < n; i++) { if(arr[i] < minValue) { minIndex = i; minValue = arr[i]; } } return minIndex; } void selectionSort(int arr[], int n) { for(int i = 0; i < n; i++) { int index = indexOfMinimum(arr, i, n); swap(arr, i, index); } } void printArray(int arr[], int size) { int i; for(i = 0; i < size; i++) { printf("%d ", arr[i]); } printf("\n"); } int main() { int arr[] = {46, 52, 21, 22, 11}; int n = sizeof(arr)/sizeof(arr[0]); selectionSort(arr, n); printf("Sorted array: \n"); printArray(arr, n); return 0; }Note: Selection sort is an unstable sort i.e it might change the occurrence of two similar elements in the list while sorting. But it can also work as a stable sort when it is implemented using linked list.

Complexity Analysis of Selection Sort

Selection Sort requires two nested

forloops to complete itself, oneforloop is in the functionselectionSort, and inside the first loop we are making a call to another functionindexOfMinimum, which has the second(inner)forloop.Hence for a given input size of

n, following will be the time and space complexity for selection sort algorithm:Worst Case Time Complexity [ Big-O ]: O(n2)

Best Case Time Complexity [Big-omega]: O(n2)

Average Time Complexity [Big-theta]: O(n2)

Space Complexity: O(1)

Insertion Sort Algorithm

Consider you have 10 cards out of a deck of cards in your hand. And they are sorted, or arranged in the ascending order of their numbers.

If I give you another card, and ask you to insert the card in just the right position, so that the cards in your hand are still sorted. What will you do?

Well, you will have to go through each card from the starting or the back and find the right position for the new card, comparing it's value with each card. Once you find the right position, you will insert the card there.

Similarly, if more new cards are provided to you, you can easily repeat the same process and insert the new cards and keep the cards sorted too.

This is exactly how insertion sort works. It starts from the index

1(not0), and each index starting from index1is like a new card, that you have to place at the right position in the sorted subarray on the left.Following are some of the important characteristics of Insertion Sort:

- It is efficient for smaller data sets, but very inefficient for larger lists.

- Insertion Sort is adaptive, that means it reduces its total number of steps if a partially sorted array is provided as input, making it efficient.

- It is better than Selection Sort and Bubble Sort algorithms.

- Its space complexity is less. Like bubble Sort, insertion sort also requires a single additional memory space.

- It is a stable sorting technique, as it does not change the relative order of elements which are equal.

How Insertion Sort Works?

Following are the steps involved in insertion sort:

- We start by making the second element of the given array, i.e. element at index

1, thekey. Thekeyelement here is the new card that we need to add to our existing sorted set of cards(remember the example with cards above). - We compare the

keyelement with the element(s) before it, in this case, element at index0:- If the

keyelement is less than the first element, we insert thekeyelement before the first element. - If the

keyelement is greater than the first element, then we insert it after the first element.

- If the

- Then, we make the third element of the array as

keyand will compare it with elements to it's left and insert it at the right position. - And we go on repeating this, until the array is sorted.

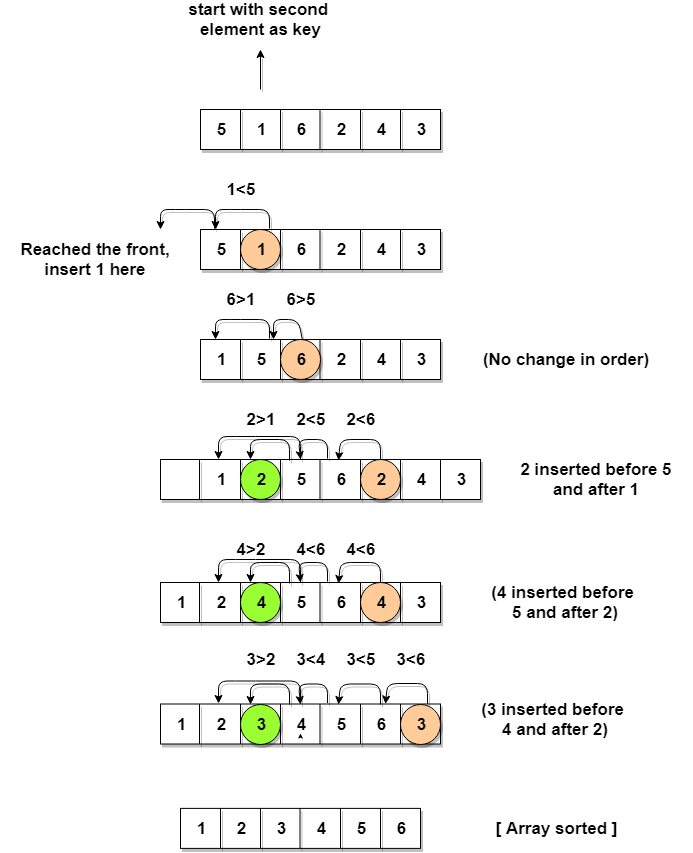

Let's consider an array with values

{5, 1, 6, 2, 4, 3}Below, we have a pictorial representation of how bubble sort will sort the given array.

As you can see in the diagram above, after picking a

key, we start iterating over the elements to the left of thekey.We continue to move towards left if the elements are greater than the

keyelement and stop when we find the element which is less than thekeyelement.And, insert the

keyelement after the element which is less than thekeyelement.Implementing Insertion Sort Algorithm

Below we have a simple implementation of Insertion sort in C++ language.

#include <stdlib.h> #include <iostream> using namespace std; //member functions declaration void insertionSort(int arr[], int length); void printArray(int array[], int size); // main function int main() { int array[5] = {5, 1, 6, 2, 4, 3}; // calling insertion sort function to sort the array insertionSort(array, 6); return 0; } void insertionSort(int arr[], int length) { int i, j, key; for (i = 1; i < length; i++) { j = i; while (j > 0 && arr[j - 1] > arr[j]) { key = arr[j]; arr[j] = arr[j - 1]; arr[j - 1] = key; j--; } } cout << "Sorted Array: "; // print the sorted array printArray(arr, length); } // function to print the given array void printArray(int array[], int size) { int j; for (j = 0; j < size; j++) { cout <<" "<< array[j]; } cout << endl; }Sorted Array: 1 2 3 4 5 6

Now let's try to understand the above simple insertion sort algorithm.

We took an array with 6 integers. We took a variable

key, in which we put each element of the array, during each pass, starting from the second element, that isa[1].Then using the

whileloop, we iterate, untiljbecomes equal to zero or we find an element which is greater thankey, and then we insert thekeyat that position.We keep on doing this, until

jbecomes equal to zero, or we encounter an element which is smaller than thekey, and then we stop. The currentkeyis now at the right position.We then make the next element as

keyand then repeat the same process.In the above array, first we pick 1 as

key, we compare it with 5(element before 1), 1 is smaller than 5, we insert 1 before 5. Then we pick 6 askey, and compare it with 5 and 1, no shifting in position this time. Then 2 becomes thekeyand is compared with 6 and 5, and then 2 is inserted after 1. And this goes on until the complete array gets sorted.Complexity Analysis of Insertion Sort

As we mentioned above that insertion sort is an efficient sorting algorithm, as it does not run on preset conditions using

forloops, but instead it uses onewhileloop, which avoids extra steps once the array gets sorted.Even though insertion sort is efficient, still, if we provide an already sorted array to the insertion sort algorithm, it will still execute the outer

forloop, thereby requiringnsteps to sort an already sorted array ofnelements, which makes its best case time complexity a linear function ofn.Worst Case Time Complexity [ Big-O ]: O(n2)

Best Case Time Complexity [Big-omega]: O(n)

Average Time Complexity [Big-theta]: O(n2)

Space Complexity: O(1)

Merge Sort Algorithm

Merge Sort follows the rule of Divide and Conquer to sort a given set of numbers/elements, recursively, hence consuming less time.

In the last two tutorials, we learned about Selection Sort and Insertion Sort, both of which have a worst-case running time of

O(n2). As the size of input grows, insertion and selection sort can take a long time to run.Merge sort , on the other hand, runs in

O(n*log n)time in all the cases.Before jumping on to, how merge sort works and it's implementation, first lets understand what is the rule of Divide and Conquer?

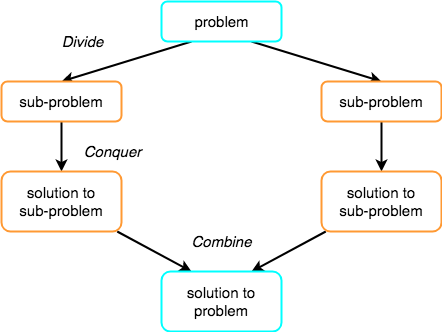

Divide and Conquer

If we can break a single big problem into smaller sub-problems, solve the smaller sub-problems and combine their solutions to find the solution for the original big problem, it becomes easier to solve the whole problem.

Let's take an example, Divide and Rule.

When Britishers came to India, they saw a country with different religions living in harmony, hard working but naive citizens, unity in diversity, and found it difficult to establish their empire. So, they adopted the policy of Divide and Rule. Where the population of India was collectively a one big problem for them, they divided the problem into smaller problems, by instigating rivalries between local kings, making them stand against each other, and this worked very well for them.

Well that was history, and a socio-political policy (Divide and Rule), but the idea here is, if we can somehow divide a problem into smaller sub-problems, it becomes easier to eventually solve the whole problem.

In Merge Sort, the given unsorted array with

nelements, is divided intonsubarrays, each having one element, because a single element is always sorted in itself. Then, it repeatedly merges these subarrays, to produce new sorted subarrays, and in the end, one complete sorted array is produced.The concept of Divide and Conquer involves three steps:

- Divide the problem into multiple small problems.

- Conquer the subproblems by solving them. The idea is to break down the problem into atomic subproblems, where they are actually solved.

- Combine the solutions of the subproblems to find the solution of the actual problem.

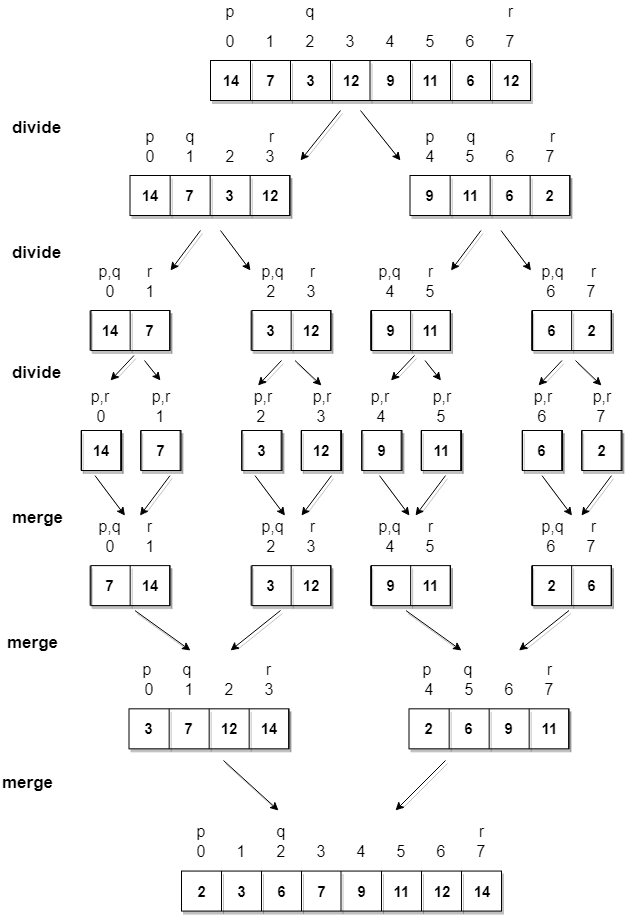

How Merge Sort Works?

As we have already discussed that merge sort utilizes divide-and-conquer rule to break the problem into sub-problems, the problem in this case being, sorting a given array.

In merge sort, we break the given array midway, for example if the original array had

6elements, then merge sort will break it down into two subarrays with3elements each.But breaking the orignal array into 2 smaller subarrays is not helping us in sorting the array.

So we will break these subarrays into even smaller subarrays, until we have multiple subarrays with single element in them. Now, the idea here is that an array with a single element is already sorted, so once we break the original array into subarrays which has only a single element, we have successfully broken down our problem into base problems.

And then we have to merge all these sorted subarrays, step by step to form one single sorted array.

Let's consider an array with values

{14, 7, 3, 12, 9, 11, 6, 12}Below, we have a pictorial representation of how merge sort will sort the given array.

In merge sort we follow the following steps:

- We take a variable

pand store the starting index of our array in this. And we take another variablerand store the last index of array in it. - Then we find the middle of the array using the formula

(p + r)/2and mark the middle index asq, and break the array into two subarrays, fromptoqand fromq + 1torindex. - Then we divide these 2 subarrays again, just like we divided our main array and this continues.

- Once we have divided the main array into subarrays with single elements, then we start merging the subarrays.

Implementing Merge Sort Algorithm

Below we have a C program implementing merge sort algorithm.

/* a[] is the array, p is starting index, that is 0, and r is the last index of array. */ #include <stdio.h> // lets take a[5] = {32, 45, 67, 2, 7} as the array to be sorted. // merge sort function void mergeSort(int a[], int p, int r) { int q; if(p < r) { q = (p + r) / 2; mergeSort(a, p, q); mergeSort(a, q+1, r); merge(a, p, q, r); } } // function to merge the subarrays void merge(int a[], int p, int q, int r) { int b[5]; //same size of a[] int i, j, k; k = 0; i = p; j = q + 1; while(i <= q && j <= r) { if(a[i] < a[j]) { b[k++] = a[i++]; // same as b[k]=a[i]; k++; i++; } else { b[k++] = a[j++]; } } while(i <= q) { b[k++] = a[i++]; } while(j <= r) { b[k++] = a[j++]; } for(i=r; i >= p; i--) { a[i] = b[--k]; // copying back the sorted list to a[] } } // function to print the array void printArray(int a[], int size) { int i; for (i=0; i < size; i++) { printf("%d ", a[i]); } printf("\n"); } int main() { int arr[] = {32, 45, 67, 2, 7}; int len = sizeof(arr)/sizeof(arr[0]); printf("Given array: \n"); printArray(arr, len); // calling merge sort mergeSort(arr, 0, len - 1); printf("\nSorted array: \n"); printArray(arr, len); return 0; }Given array: 32 45 67 2 7 Sorted array: 2 7 32 45 67

Complexity Analysis of Merge Sort

Merge Sort is quite fast, and has a time complexity of

O(n*log n). It is also a stable sort, which means the "equal" elements are ordered in the same order in the sorted list.In this section we will understand why the running time for merge sort is

O(n*log n).As we have already learned in Binary Search that whenever we divide a number into half in every stpe, it can be represented using a logarithmic function, which is

log nand the number of steps can be represented bylog n + 1(at most)Also, we perform a single step operation to find out the middle of any subarray, i.e.

O(1).And to merge the subarrays, made by dividing the original array of

nelements, a running time ofO(n)will be required.Hence the total time for

mergeSortfunction will becomen(log n + 1), which gives us a time complexity ofO(n*log n).Worst Case Time Complexity [ Big-O ]: O(n*log n)

Best Case Time Complexity [Big-omega]: O(n*log n)

Average Time Complexity [Big-theta]: O(n*log n)

Space Complexity: O(n)

- Time complexity of Merge Sort is

O(n*Log n)in all the 3 cases (worst, average and best) as merge sort always divides the array in two halves and takes linear time to merge two halves. - It requires equal amount of additional space as the unsorted array. Hence its not at all recommended for searching large unsorted arrays.

- It is the best Sorting technique used for sorting Linked Lists.

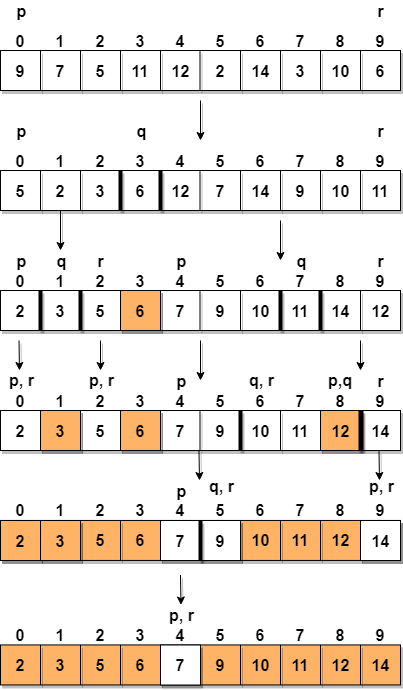

Quick Sort Algorithm

Quick Sort is also based on the concept of Divide and Conquer, just like merge sort. But in quick sort all the heavy lifting(major work) is done while dividing the array into subarrays, while in case of merge sort, all the real work happens during merging the subarrays. In case of quick sort, the combine step does absolutely nothing.

It is also called partition-exchange sort. This algorithm divides the list into three main parts:

- Elements less than the Pivot element

- Pivot element(Central element)

- Elements greater than the pivot element

Pivot element can be any element from the array, it can be the first element, the last element or any random element. In this tutorial, we will take the rightmost element or the last element as pivot.

For example: In the array

{52, 37, 63, 14, 17, 8, 6, 25}, we take25as pivot. So after the first pass, the list will be changed like this.{

6 8 17 142563 37 52}Hence after the first pass, pivot will be set at its position, with all the elements smaller to it on its left and all the elements larger than to its right. Now

6 8 17 14and63 37 52are considered as two separate sunarrays, and same recursive logic will be applied on them, and we will keep doing this until the complete array is sorted.How Quick Sorting Works?

Following are the steps involved in quick sort algorithm:

- After selecting an element as pivot, which is the last index of the array in our case, we divide the array for the first time.

- In quick sort, we call this partitioning. It is not simple breaking down of array into 2 subarrays, but in case of partitioning, the array elements are so positioned that all the elements smaller than the pivot will be on the left side of the pivot and all the elements greater than the pivot will be on the right side of it.

- And the pivot element will be at its final sorted position.

- The elements to the left and right, may not be sorted.

- Then we pick subarrays, elements on the left of pivot and elements on the right of pivot, and we perform partitioning on them by choosing a pivot in the subarrays.

Let's consider an array with values

{9, 7, 5, 11, 12, 2, 14, 3, 10, 6}Below, we have a pictorial representation of how quick sort will sort the given array.

In step 1, we select the last element as the pivot, which is

6in this case, and call forpartitioning, hence re-arranging the array in such a way that6will be placed in its final position and to its left will be all the elements less than it and to its right, we will have all the elements greater than it.Then we pick the subarray on the left and the subarray on the right and select a pivot for them, in the above diagram, we chose

3as pivot for the left subarray and11as pivot for the right subarray.And we again call for

partitioning.Implementing Quick Sort Algorithm

Below we have a simple C program implementing the Quick sort algorithm:

// simple C program for Quick Sort #include <stdio.h> int partition(int a[], int beg, int end); void quickSort(int a[], int beg, int end); void main() { int i; int arr[10]={90,23,101,45,65,28,67,89,34,29}; quickSort(arr, 0, 9); printf("\n The sorted array is: \n"); for(i=0;i<10;i++) printf(" %d\t", arr[i]); } int partition(int a[], int beg, int end) { int left, right, temp, loc, flag; loc = left = beg; right = end; flag = 0; while(flag != 1) { while((a[loc] <= a[right]) && (loc!=right)) right--; if(loc==right) flag =1; else if(a[loc]>a[right]) { temp = a[loc]; a[loc] = a[right]; a[right] = temp; loc = right; } if(flag!=1) { while((a[loc] >= a[left]) && (loc!=left)) left++; if(loc==left) flag =1; else if(a[loc] < a[left]) { temp = a[loc]; a[loc] = a[left]; a[left] = temp; loc = left; } } } return loc; } void quickSort(int a[], int beg, int end) { int loc; if(beg<end) { loc = partition(a, beg, end); quickSort(a, beg, loc-1); quickSort(a, loc+1, end); } }Complexity Analysis of Quick Sort

For an array, in which partitioning leads to unbalanced subarrays, to an extent where on the left side there are no elements, with all the elements greater than the pivot, hence on the right side.

And if keep on getting unbalanced subarrays, then the running time is the worst case, which is

O(n2)Where as if partitioning leads to almost equal subarrays, then the running time is the best, with time complexity as O(n*log n).

Worst Case Time Complexity [ Big-O ]: O(n2)

Best Case Time Complexity [Big-omega]: O(n*log n)

Average Time Complexity [Big-theta]: O(n*log n)

Space Complexity: O(n*log n)

As we know now, that if subarrays partitioning produced after partitioning are unbalanced, quick sort will take more time to finish. If someone knows that you pick the last index as pivot all the time, they can intentionally provide you with array which will result in worst-case running time for quick sort.

To avoid this, you can pick random pivot element too. It won't make any difference in the algorithm, as all you need to do is, pick a random element from the array, swap it with element at the last index, make it the pivot and carry on with quick sort.

- Space required by quick sort is very less, only

O(n*log n)additional space is required. - Quick sort is not a stable sorting technique, so it might change the occurence of two similar elements in the list while sorting.

Heap Sort Algorithm

Heap Sort is one of the best sorting methods being in-place and with no quadratic worst-case running time. Heap sort involves building a Heap data structure from the given array and then utilizing the Heap to sort the array.

You must be wondering, how converting an array of numbers into a heap data structure will help in sorting the array. To understand this, let's start by understanding what is a Heap.

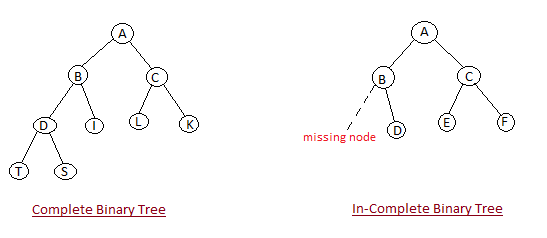

What is a Heap ?

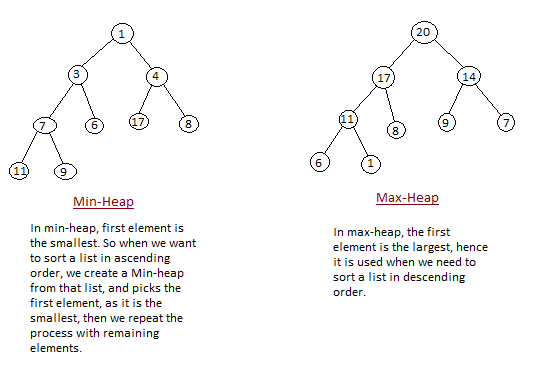

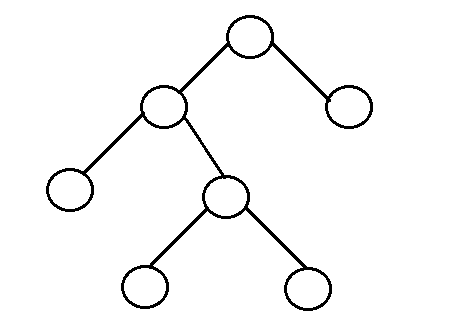

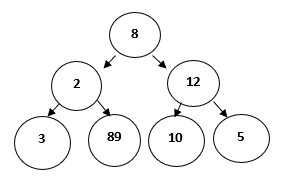

Heap is a special tree-based data structure, that satisfies the following special heap properties:

- Shape Property: Heap data structure is always a Complete Binary Tree, which means all levels of the tree are fully filled.

- Heap Property: All nodes are either greater than or equal to or less than or equal to each of its children. If the parent nodes are greater than their child nodes, heap is called a Max-Heap, and if the parent nodes are smaller than their child nodes, heap is called Min-Heap.

How Heap Sort Works?

Heap sort algorithm is divided into two basic parts:

- Creating a Heap of the unsorted list/array.

- Then a sorted array is created by repeatedly removing the largest/smallest element from the heap, and inserting it into the array. The heap is reconstructed after each removal.

Initially on receiving an unsorted list, the first step in heap sort is to create a Heap data structure(Max-Heap or Min-Heap). Once heap is built, the first element of the Heap is either largest or smallest(depending upon Max-Heap or Min-Heap), so we put the first element of the heap in our array. Then we again make heap using the remaining elements, to again pick the first element of the heap and put it into the array. We keep on doing the same repeatedly untill we have the complete sorted list in our array.

In the below algorithm, initially

heapsort()function is called, which callsheapify()to build the heap.Implementing Heap Sort Algorithm

Below we have a simple C++ program implementing the Heap sort algorithm.

/* Below program is written in C++ language */ #include <iostream> using namespace std; void heapify(int arr[], int n, int i) { int largest = i; int l = 2*i + 1; int r = 2*i + 2; // if left child is larger than root if (l < n && arr[l] > arr[largest]) largest = l; // if right child is larger than largest so far if (r < n && arr[r] > arr[largest]) largest = r; // if largest is not root if (largest != i) { swap(arr[i], arr[largest]); // recursively heapify the affected sub-tree heapify(arr, n, largest); } } void heapSort(int arr[], int n) { // build heap (rearrange array) for (int i = n / 2 - 1; i >= 0; i--) heapify(arr, n, i); // one by one extract an element from heap for (int i=n-1; i>=0; i--) { // move current root to end swap(arr[0], arr[i]); // call max heapify on the reduced heap heapify(arr, i, 0); } } /* function to print array of size n */ void printArray(int arr[], int n) { for (int i = 0; i < n; i++) { cout << arr[i] << " "; } cout << "\n"; } int main() { int arr[] = {121, 10, 130, 57, 36, 17}; int n = sizeof(arr)/sizeof(arr[0]); heapSort(arr, n); cout << "Sorted array is \n"; printArray(arr, n); }Complexity Analysis of Heap Sort

Worst Case Time Complexity: O(n*log n)

Best Case Time Complexity: O(n*log n)

Average Time Complexity: O(n*log n)

Space Complexity : O(1)

- Heap sort is not a Stable sort, and requires a constant space for sorting a list.

- Heap Sort is very fast and is widely used for sorting.

- Shape Property: Heap data structure is always a Complete Binary Tree, which means all levels of the tree are fully filled.

Counting Sort Algorithm

Counting Sort Algorithm is an efficient sorting algorithm that can be used for sorting elements within a specific range. This sorting technique is based on the frequency/count of each element to be sorted and works using the following algorithm-

- Input: Unsorted array A[] of n elements

- Output: Sorted arrayB[]

Step 1: Consider an input array A having n elements in the range of 0 to k, where n and k are positive integer numbers. These n elements have to be sorted in ascending order using the counting sort technique. Also note that A[] can have distinct or duplicate elements

Step 2: The count/frequency of each distinct element in A is computed and stored in another array, say count, of size k+1. Let u be an element in A such that its frequency is stored at count[u].

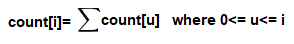

Step 3: Update the count array so that element at each index, say i, is equal to -

Step 4: The updated count array gives the index of each element of array A in the sorted sequence. Assume that the sorted sequence is stored in an output array, say B, of size n.

Step 5: Add each element from input array A to B as follows:

- Set i=0 and

t = A[i] - Add t to B[v] such that

v = (count[t]-1). - Decrement count[t] by 1

- Increment i by 1

Repeat steps (a) to (d) till i = n-1

Step 6: Display B since this is the sorted array

Pictorial Representation of Counting Sort with an Example

Let us trace the above algorithm using an example:

Consider the following input array A to be sorted. All the elements are in range 0 to 9

A[]= {1, 3, 2, 8, 5, 1, 5, 1, 2, 7}Step 1: Initialize an auxiliary array, say count and store the frequency of every distinct element. Size of count is 10 (k+1, such that range of elements in A is 0 to k)

Figure 1: count array

Step 2: Using the formula, updated count array is -

Figure 2: Formula for updating count array

Figure 3 : Updated count array

Step 3: Add elements of array A to resultant array B using the following steps:

- For, i=0, t=1, count[1]=3, v=2. After adding 1 to B[2], count[1]=2 and i=1

Figure 4: For i=0

- For i=1, t=3, count[3]=6, v=5. After adding 3 to B[5], count[3]=5 and i=2

Figure 5: For i=1

- For i=2, t=2, count[2]= 5, v=4. After adding 2 to B[4], count[2]=4 and i=3

Figure 6: For i=2

- For i=3, t=8, count[8]= 10, v=9. After adding 8 to B[9], count[8]=9 and i=4

Figure 7: For i=3

- On similar lines, we have the following:

Figure 8: For i=4

Figure 9: For i=5

Figure 10: For i=6

Figure 11: For i=7

Figure 12: For i=8

Figure 13: For i=9

Thus, array

Bhas the sorted list of elements.Program for Counting Sort Algorithm

Below we have a simple program in C++ implementing the counting sort algorithm:

#include<iostream> using namespace std; int k=0; // for storing the maximum element of input array /* Method to sort the array */ void sort_func(int A[],int B[],int n) { int count[k+1],t; for(int i=0;i<=k;i++) { //Initialize array count count[i] = 0; } for(int i=0;i<n;i++) { // count the occurrence of elements u of A // & increment count[u] where u=A[i] t = A[i]; count[t]++; } for(int i=1;i<=k;i++) { // Updating elements of count array count[i] = count[i]+count[i-1]; } for(int i=0;i<n;i++) { // Add elements of array A to array B t = A[i]; B[count[t]] = t; // Decrement the count value by 1 count[t]=count[t]-1; } } int main() { int n; cout<<"Enter the size of the array :"; cin>>n; // A is the input array and will store elements entered by the user // B is the output array having the sorted elements int A[n],B[n]; cout<<"Enter the array elements: "; for(int i=0;i<n;i++) { cin>>A[i]; if(A[i]>k) { // k will have the maximum element of A[] k = A[i]; } } sort_func(A,B,n); // Printing the elements of array B for(int i=1;i<=n;i++) { cout<<B[i]<<" "; } cout<<"\n"; return 0; }The input array is the same as that used in the example:

Figure 14: Output of Program

Note: The algorithm can be mapped to any programming language as per the requirement.

Time Complexity Analysis

For scanning the input array elements, the loop iterates n times, thus taking

O(n)running time. The sorted array B[] also gets computed in n iterations, thus requiring O(n) running time. The count array also uses k iterations, thus has a running time of O(k). Thus the total running time for counting sort algorithm isO(n+k).Key Points:

- The above implementation of Counting Sort can also be extended to sort negative input numbers

- Since counting sort is suitable for sorting numbers that belong to a well-defined, finite and small range, it can be used asa subprogram in other sorting algorithms like radix sort which can be used for sorting numbers having a large range

- Counting Sort algorithm is efficient if the range of input data (k) is not much greater than the number of elements in the input array (n). It will not work if we have 5 elements to sort in the range of 0 to 10,000

- It is an integer-based sorting algorithm unlike others which are usually comparison-based. A comparison-based sorting algorithm sorts numbers only by comparing pairs of numbers. Few examples of comparison based sorting algorithms are quick sort, merge sort, bubble sort, selection sort, heap sort, insertion sort, whereas algorithms like radix sort, bucket sort and comparison sort fall into the category of non-comparison based sorting algorithms.

Advantages of Counting Sort:

- It is quite fast

- It is a stable algorithm

Note: For a sorting algorithm to be stable, the order of elements with equal keys (values) in the sorted array should be the same as that of the input array.

Disadvantages of Counting Sort:

- It is not suitable for sorting large data sets

- It is not suitable for sorting string values

What is Stack Data Structure?

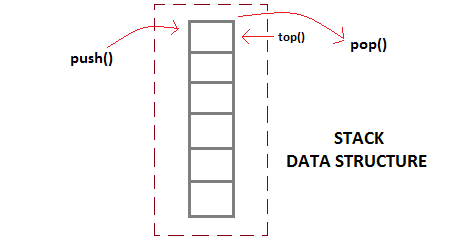

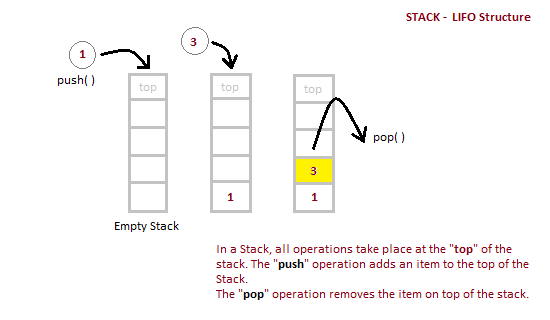

Stack is an abstract data type with a bounded(predefined) capacity. It is a simple data structure that allows adding and removing elements in a particular order. Every time an element is added, it goes on the top of the stack and the only element that can be removed is the element that is at the top of the stack, just like a pile of objects.

Basic features of Stack

- Stack is an ordered list of similar data type.

- Stack is a LIFO(Last in First out) structure or we can say FILO(First in Last out).

push()function is used to insert new elements into the Stack andpop()function is used to remove an element from the stack. Both insertion and removal are allowed at only one end of Stack called Top.- Stack is said to be in Overflow state when it is completely full and is said to be in Underflow state if it is completely empty.

Applications of Stack

The simplest application of a stack is to reverse a word. You push a given word to stack - letter by letter - and then pop letters from the stack.

There are other uses also like:

- Parsing

- Expression Conversion(Infix to Postfix, Postfix to Prefix etc)

Implementation of Stack Data Structure

Stack can be easily implemented using an Array or a Linked List. Arrays are quick, but are limited in size and Linked List requires overhead to allocate, link, unlink, and deallocate, but is not limited in size. Here we will implement Stack using array.

Algorithm for PUSH operation

- Check if the stack is full or not.

- If the stack is full, then print error of overflow and exit the program.

- If the stack is not full, then increment the top and add the element.

Algorithm for POP operation

- Check if the stack is empty or not.

- If the stack is empty, then print error of underflow and exit the program.

- If the stack is not empty, then print the element at the top and decrement the top.

Below we have a simple C++ program implementing stack data structure while following the object oriented programming concepts.

/* Below program is written in C++ language */ # include<iostream> using namespace std; class Stack { int top; public: int a[10]; //Maximum size of Stack Stack() { top = -1; } // declaring all the function void push(int x); int pop(); void isEmpty(); }; // function to insert data into stack void Stack::push(int x) { if(top >= 10) { cout << "Stack Overflow \n"; } else { a[++top] = x; cout << "Element Inserted \n"; } } // function to remove data from the top of the stack int Stack::pop() { if(top < 0) { cout << "Stack Underflow \n"; return 0; } else { int d = a[top--]; return d; } } // function to check if stack is empty void Stack::isEmpty() { if(top < 0) { cout << "Stack is empty \n"; } else { cout << "Stack is not empty \n"; } } // main function int main() { Stack s1; s1.push(10); s1.push(100); /* preform whatever operation you want on the stack */ }Position of TopStatus of Stack-1Stack is Empty 0Only one element in Stack N-1Stack is Full NOverflow state of Stack Analysis of Stack Operations

Below mentioned are the time complexities for various operations that can be performed on the Stack data structure.

- Push Operation : O(1)

- Pop Operation : O(1)

- Top Operation : O(1)

- Search Operation : O(n)

The time complexities for

push()andpop()functions areO(1)because we always have to insert or remove the data from the top of the stack, which is a one step process.- Like stack, queue is also an ordered list of elements of similar data types.

- Queue is a FIFO( First in First Out ) structure.

- Once a new element is inserted into the Queue, all the elements inserted before the new element in the queue must be removed, to remove the new element.

peek( )function is oftenly used to return the value of first element without dequeuing it.- Serving requests on a single shared resource, like a printer, CPU task scheduling etc.

- In real life scenario, Call Center phone systems uses Queues to hold people calling them in an order, until a service representative is free.

- Handling of interrupts in real-time systems. The interrupts are handled in the same order as they arrive i.e First come first served.

- Check if the queue is full or not.

- If the queue is full, then print overflow error and exit the program.

- If the queue is not full, then increment the tail and add the element.

- Check if the queue is empty or not.

- If the queue is empty, then print underflow error and exit the program.

- If the queue is not empty, then print the element at the head and increment the head.

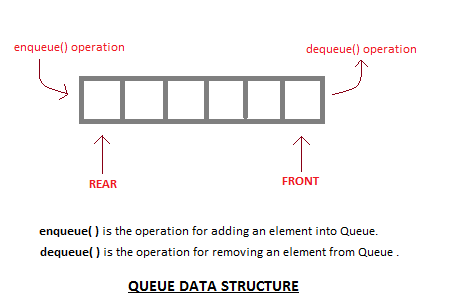

What is a Queue Data Structure?

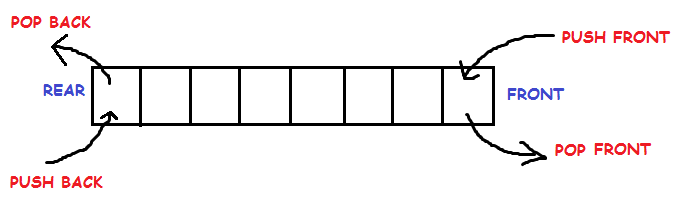

Queue is also an abstract data type or a linear data structure, just like stack data structure, in which the first element is inserted from one end called the REAR(also called tail), and the removal of existing element takes place from the other end called as FRONT(also called head).

This makes queue as FIFO(First in First Out) data structure, which means that element inserted first will be removed first.

Which is exactly how queue system works in real world. If you go to a ticket counter to buy movie tickets, and are first in the queue, then you will be the first one to get the tickets. Right? Same is the case with Queue data structure. Data inserted first, will leave the queue first.

The process to add an element into queue is called Enqueue and the process of removal of an element from queue is called Dequeue.

Basic features of Queue

Applications of Queue

Queue, as the name suggests is used whenever we need to manage any group of objects in an order in which the first one coming in, also gets out first while the others wait for their turn, like in the following scenarios:

Implementation of Queue Data Structure

Queue can be implemented using an Array, Stack or Linked List. The easiest way of implementing a queue is by using an Array.

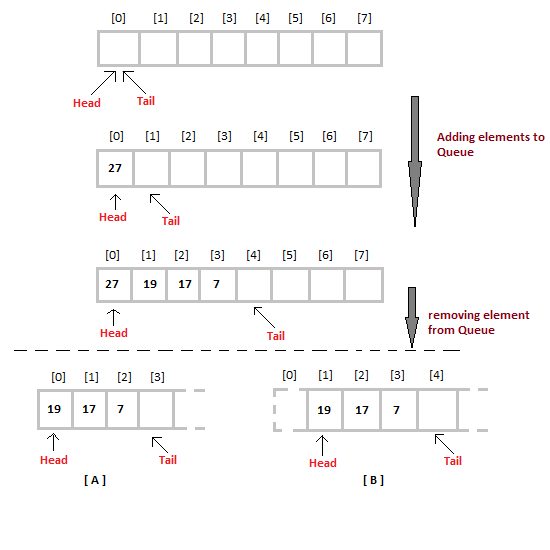

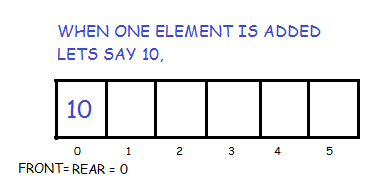

Initially the head(FRONT) and the tail(REAR) of the queue points at the first index of the array (starting the index of array from 0). As we add elements to the queue, the tail keeps on moving ahead, always pointing to the position where the next element will be inserted, while the head remains at the first index.

When we remove an element from Queue, we can follow two possible approaches (mentioned [A] and [B] in above diagram). In [A] approach, we remove the element at head position, and then one by one shift all the other elements in forward position.

In approach [B] we remove the element from head position and then move head to the next position.

In approach [A] there is an overhead of shifting the elements one position forward every time we remove the first element.

In approach [B] there is no such overhead, but whenever we move head one position ahead, after removal of first element, the size on Queue is reduced by one space each time.

Algorithm for ENQUEUE operation

Algorithm for DEQUEUE operation

/* Below program is written in C++ language */

#include<iostream>

using namespace std;

#define SIZE 10

class Queue

{

int a[SIZE];

int rear; //same as tail

int front; //same as head

public:

Queue()

{

rear = front = -1;

}

//declaring enqueue, dequeue and display functions

void enqueue(int x);

int dequeue();

void display();

};

// function enqueue - to add data to queue

void Queue :: enqueue(int x)

{

if(front == -1) {

front++;

}

if( rear == SIZE-1)

{

cout << "Queue is full";

}

else

{

a[++rear] = x;

}

}

// function dequeue - to remove data from queue

int Queue :: dequeue()

{

return a[++front]; // following approach [B], explained above

}

// function to display the queue elements

void Queue :: display()

{

int i;

for( i = front; i <= rear; i++)

{

cout << a[i] << endl;

}

}

// the main function

int main()

{

Queue q;

q.enqueue(10);

q.enqueue(100);

q.enqueue(1000);

q.enqueue(1001);

q.enqueue(1002);

q.dequeue();

q.enqueue(1003);

q.dequeue();

q.dequeue();

q.enqueue(1004);

q.display();

return 0;

}To implement approach [A], you simply need to change the dequeue method, and include a for loop which will shift all the remaining elements by one position.

return a[0]; //returning first element

for (i = 0; i < tail-1; i++) //shifting all other elements

{

a[i] = a[i+1];

tail--;

}Complexity Analysis of Queue Operations

Just like Stack, in case of a Queue too, we know exactly, on which position new element will be added and from where an element will be removed, hence both these operations requires a single step.

- Enqueue: O(1)

- Dequeue: O(1)

- Size: O(1)

What is a Circular Queue?

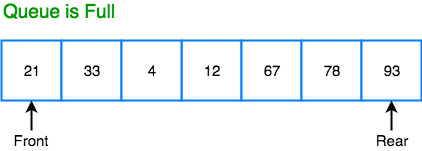

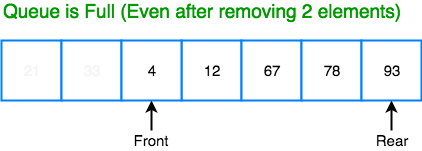

Before we start to learn about Circular queue, we should first understand, why we need a circular queue, when we already have linear queue data structure.

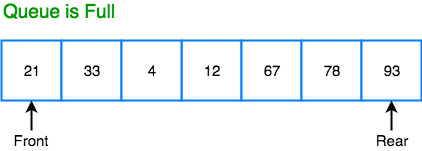

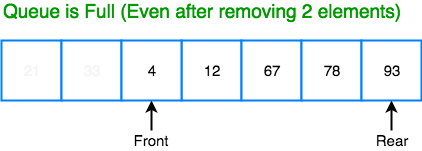

In a Linear queue, once the queue is completely full, it's not possible to insert more elements. Even if we dequeue the queue to remove some of the elements, until the queue is reset, no new elements can be inserted. You must be wondering why?

When we dequeue any element to remove it from the queue, we are actually moving the front of the queue forward, thereby reducing the overall size of the queue. And we cannot insert new elements, because the rear pointer is still at the end of the queue.

The only way is to reset the linear queue, for a fresh start.

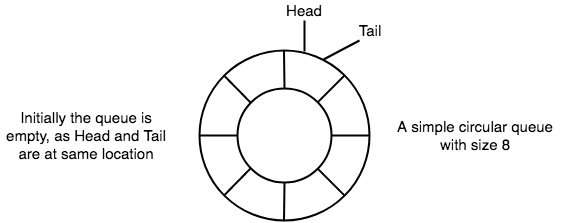

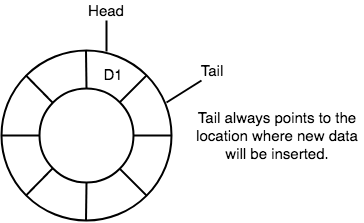

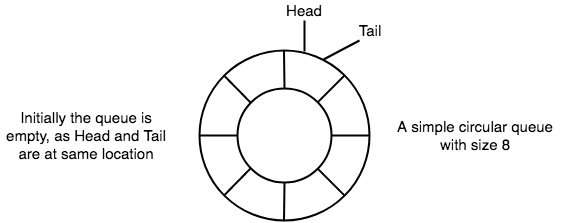

Circular Queue is also a linear data structure, which follows the principle of FIFO(First In First Out), but instead of ending the queue at the last position, it again starts from the first position after the last, hence making the queue behave like a circular data structure.

Basic features of Circular Queue

- In case of a circular queue,

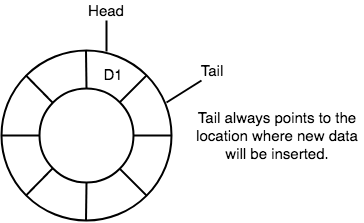

headpointer will always point to the front of the queue, andtailpointer will always point to the end of the queue. - Initially, the head and the tail pointers will be pointing to the same location, this would mean that the queue is empty.

- New data is always added to the location pointed by the

tailpointer, and once the data is added,tailpointer is incremented to point to the next available location.

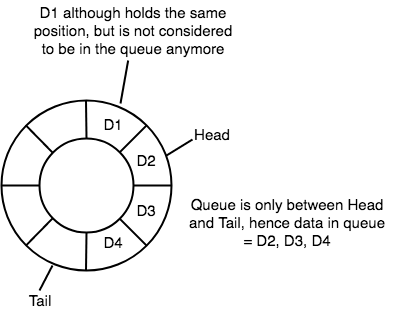

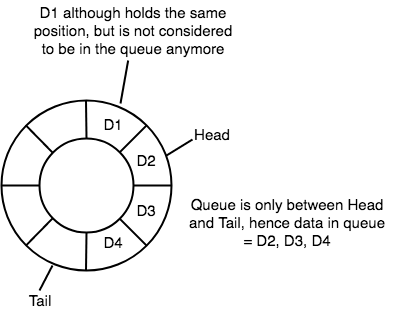

- In a circular queue, data is not actually removed from the queue. Only the

headpointer is incremented by one position when dequeue is executed. As the queue data is only the data betweenheadandtail, hence the data left outside is not a part of the queue anymore, hence removed.

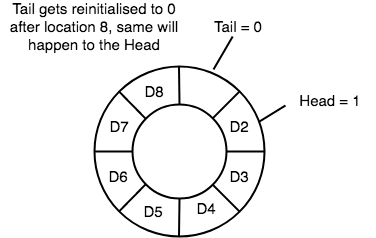

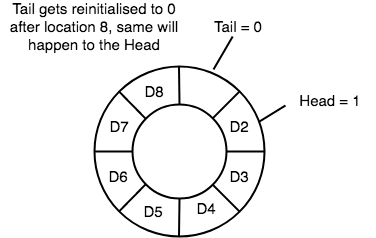

- The

headand thetailpointer will get reinitialised to 0 every time they reach the end of the queue.

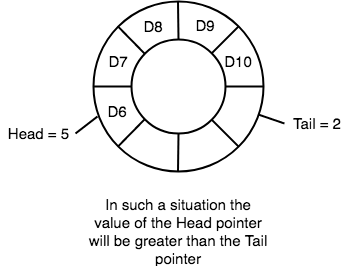

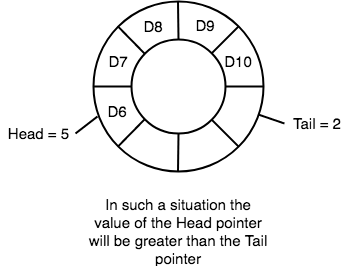

- Also, the

headand thetailpointers can cross each other. In other words,headpointer can be greater than thetail. Sounds odd? This will happen when we dequeue the queue a couple of times and thetailpointer gets reinitialised upon reaching the end of the queue.

Going Round and Round

Another very important point is keeping the value of the tail and the head pointer within the maximum queue size.

In the diagrams above the queue has a size of 8, hence, the value of tail and head pointers will always be between 0 and 7.

This can be controlled either by checking everytime whether tail or head have reached the maxSize and then setting the value 0 or, we have a better way, which is, for a value x if we divide it by 8, the remainder will never be greater than 8, it will always be between 0 and 0, which is exactly what we want.

So the formula to increment the head and tail pointers to make them go round and round over and again will be, head = (head+1) % maxSize or tail = (tail+1) % maxSize

Application of Circular Queue

Below we have some common real-world examples where circular queues are used:

- Computer controlled Traffic Signal System uses circular queue.

- CPU scheduling and Memory management.

Implementation of Circular Queue

Below we have the implementation of a circular queue:

- Initialize the queue, with size of the queue defined (

maxSize), andheadandtailpointers. enqueue: Check if the number of elements is equal to maxSize - 1:- If Yes, then return Queue is full.

- If No, then add the new data element to the location of

tailpointer and increment thetailpointer.

dequeue: Check if the number of elements in the queue is zero:- If Yes, then return Queue is empty.

- If No, then increment the

headpointer.

- Finding the

size:- If, tail >= head,

size = (tail - head) + 1 - But if, head > tail, then

size = maxSize - (head - tail) + 1

- If, tail >= head,

/* Below program is written in C++ language */

#include<iostream>

using namespace std;

#define SIZE 10

class CircularQueue

{

int a[SIZE];

int rear; //same as tail

int front; //same as head

public:

CircularQueue()

{

rear = front = -1;

}

// function to check if queue is full

bool isFull()

{

if(front == 0 && rear == SIZE - 1)

{

return true;

}

if(front == rear + 1)

{

return true;

}

return false;

}

// function to check if queue is empty

bool isEmpty()

{

if(front == -1)

{

return true;

}

else

{

return false;

}

}

//declaring enqueue, dequeue, display and size functions

void enqueue(int x);

int dequeue();

void display();

int size();

};

// function enqueue - to add data to queue

void CircularQueue :: enqueue(int x)

{

if(isFull())

{

cout << "Queue is full";

}

else

{

if(front == -1)

{

front = 0;

}

rear = (rear + 1) % SIZE; // going round and round concept

// inserting the element

a[rear] = x;

cout << endl << "Inserted " << x << endl;

}

}

// function dequeue - to remove data from queue

int CircularQueue :: dequeue()

{

int y;

if(isEmpty())

{

cout << "Queue is empty" << endl;

}

else

{

y = a[front];

if(front == rear)

{

// only one element in queue, reset queue after removal

front = -1;

rear = -1;

}

else

{

front = (front+1) % SIZE;

}

return(y);

}

}

void CircularQueue :: display()

{

/* Function to display status of Circular Queue */

int i;

if(isEmpty())

{

cout << endl << "Empty Queue" << endl;

}

else

{

cout << endl << "Front -> " << front;

cout << endl << "Elements -> ";

for(i = front; i != rear; i= (i+1) % SIZE)

{

cout << a[i] << "\t";

}

cout << a[i];

cout << endl << "Rear -> " << rear;

}

}

int CircularQueue :: size()

{

if(rear >= front)

{

return (rear - front) + 1;

}

else

{

return (SIZE - (front - rear) + 1);

}

}

// the main function

int main()

{

CircularQueue cq;

cq.enqueue(10);

cq.enqueue(100);

cq.enqueue(1000);

cout << endl << "Size of queue: " << cq.size();

cout << endl << "Removed element: " << cq.dequeue();

cq.display();

return 0;

}Inserted 10 Inserted 100 Inserted 1000 Size of queue: 3 Removed element: 10 Front -> 1 Elements -> 100 1000 Rear -> 2

What is a Circular Queue?

Before we start to learn about Circular queue, we should first understand, why Uwe need a circular queue, when we already have linear queue data structure.

In a Linear queue, once the queue is completely full, it's not possible to insert more elements. Even if we dequeue the queue to remove some of the elements, until the queue is reset, no new elements can be inserted. You must be wondering why?

When we dequeue any element to remove it from the queue, we are actually moving the front of the queue forward, thereby reducing the overall size of the queue. And we cannot insert new elements, because the rear pointer is still at the end of the queue.

The only way is to reset the linear queue, for a fresh start.